In this post I’m going to talk about linked lists, a seemingly trivial subject that many programmers — even good ones — seem to get terribly wrong!

Then I’m going to share techniques (with source code) to make your game engine code simpler, faster, more memory efficient and more reliable. Wow!

This post is part of a three-part article:

- Part 1: Why Starcraft crashed frequently during development

- This post: How we could have fixed the most common causes

- Part 3: Explaining the implementation details of the fix

TL;DR: Here’s the source code which implements an intrusive doubly-linked list that’s better than std::list and boost intrusive lists (for some definition of better).

Impetus: someone is wrong on the Internet

I was reading an article by Martin Sústrik, one of the lead developers of ZeroMQ, a network coding library I’ve both used and recommended. It rocks for coding server-to-server network interactions, and is included in awesome projects like Mongrel 2.

Martin recently posted about why he should have written ZeroMQ in C instead of C++, which turned out to be my impetus for writing this series of three articles. In his second article on the subject he spent the majority of the post writing about the failures of the C++ Standard Template Library linked list (STL std::list library), and drew the conclusion that he could have done a better job using the C language.

As much as I respect the work he’s done developing ZeroMQ, I think there’s a better answer than re-architecting the library in C.

Using std::list

Here’s how a programmer declares a linked list using STL (cribbed from the aforementioned article):

struct person {

unsigned age;

unsigned weight;

};

std::list <person*> people;

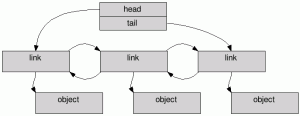

After adding a few members to the linked list, we’d get something that looks like this in memory:

Here is code that safely removes a person record from the linked list:

// NOTE: O(N) algorithm

// -- requires list scanning

// -- requires memory deallocation

void erase_person (person *ptr) {

std::list <person*>::iterator it;

for (it = people.begin(); it != people.end(); ++it) {

if (*it != ptr)

continue;

people.erase(it);

break; // assume person is only linked once

}

}

Tragically, this unlinking code is awful: for a list with N entries, on average it is necessary to scan N/2 entries to find the element we’re interested in removing, which is why a linked list is a bad choice for storing records that need to be accessed in random order.

More importantly, it’s necessary to write list-removal functions like the code above, which takes programmer dev-time, slows compilation, and can have bugs. With a better linked-list library, this code is entirely unnecessary.

Using intrusive lists

Let’s talk about an alternative to std::list using intrusive lists.

Intrusive lists are ones that require the “link” fields to be embedded directly into the structure being linked. Unlike externally linked lists, where a separate object is used to hold a pointer to the object and previous/next links, the intrusive list makes its presence known in the structure being linked.

Here’s code that re-implements the example above so that it use intrusive links:

struct person {

TLink link; // The "intrusive" link field

unsigned age;

unsigned weight;

};

TListDeclare<person, offsetof(person, link)> people;

I use a #define macro to avoid code-duplication and typo-errors, so my list definition would actually look like this:

LIST_DECLARE(person, link) people;

In comparison to std::list, when viewing the memory layout you can see that there are fewer objects allocated in memory:

Moreover, the code to remove an element from the list is both simpler and faster, and does not require memory deallocation either:

// NOTE: O(1) algorithm

// -- no list traversal

// -- no memory deallocation

void erase_person (person *ptr) {

ptr->link.Unlink(); // hmm... must be some magic in there

}

And even better, if you delete a record that’s part of an intrusive list, it automatically unlinks itself from the list:

void delete_person (person *ptr) {

// automagically unlinks person record no matter

// which linked list it is contained within

delete ptr;

}

Why intrusive lists are better

If you’ve made it this far, you probably have an inkling about why intrusive lists are better than externally-linked lists in many cases; here are the ones I’ve come up with:

- Because the link fields which are used to include an object within a linked-list are embedded within the object itself it is no longer necessary to allocate memory to link an item onto a list, nor deallocate memory when unlinking.

ApplicationSpeed++.MemoryUtilization–. - When traversing objects stored on an intrusive linked list, it only takes one pointer indirection to get to the object, compared to two pointer indirections for std::list. This causes less memory-cache thrashing so your program runs faster — particularly on modern processors which have huge delays for memory stalls.

ApplicationSpeed++.CacheThrashing–. -

We reduce code failure paths because it is no longer necessary to handle out-of-memory exceptions when linking items.

CodeObjectSize--.CodeComplexity–. -

Most importantly, objects are automatically removed from the lists they’re linked into, eliminating many common types of bugs. `ApplicationReliability++.

How do I link an object to multiple lists?

One of the great benefits of intrusive lists is that they just work. Let’s see how:

struct employee {

TLink<employee> employeeLink;

TLink<employee> managerLink;

unsigned salary;

};

struct manager : employee {

LIST_DECLARE(employee, managerLink) staff;

};

LIST_DECLARE(employee, employeeLink) employees;

void friday () {

// Hire Joe, a cashier

employee * joe = new employee;

joe->salary = 50 * 1000;

employees.LinkTail(joe);

// Hire Sally, a shift manager

manager * sally = new manager;

sally->salary = 80 * 1000;

employees.LinkTail(sally);

sally->staff.LinkTail(joe);

// Oops, Joe got caught with his hand in the till

delete joe;

// Now that Joe is gone, Sally has no reports

ASSERT(sally->staff.Empty());

// And she is now the only employee

ASSERT(employees.Head() == sally);

ASSERT(employees.Tail() == sally);

}

Pretty nifty, huh? You can see how a lot of common errors can be avoided when cleanup is automatic.

There’s a fly in my soup

Some folks might be concerned that their objects now contain those intrusive link fields which are unrelated to the data the object is supposed to contain. The person record is now “polluted” with external “stuff”.

This does make it harder to do leet-programmer stuff like directly writing the record to disk (can’t safely write pointers), using memcmp to compare objects (those pesky pointers again) and similarly hackish stuff. But you shouldn’t be doing that anyway. Reliability is more important than speed, and if you’re reduced to using those hacks for speed-gains your program needs help. Remember Y2K bugs!

Where is my object linked?

When using intrusive lists your program must declare which lists a record will be embedded within when declaring those structures. In most cases this is trivial, but some cases require finesse:

// Some3rdPartyHeaderYouCannotChange.h

struct Some3rdPartyStruct {

// lots of data

};

// MyProgram.cpp

struct MyStruct : Some3rdPartyStruct {

TLink<MyStruct> link;

}

LIST_DECLARE(MyStruct, link) mylist;

Of course if you don’t control the structure definition nor the code where it is allocated, which might be true when working with third-party libraries you can fall back to using std::list.

Cautions for threaded code

When writing multi-threaded code it is important to remember that a delete operation will call the destructors for each intrusive link field to remove that element from the list.

If the object being deleted has already been unlinked from all lists, then no problem. But if the object is still linked to a list it will be necessary to use locks to protect against race conditions. Here are some solutions from worst to best:

// Wrap destructor with a lock to avoid race condition

// Downsides:

// lock held while calling destructor

// lock held during memory free

void threadsafe_delete_person (person *ptr) {

s_personlock.EnterWrite();

{

delete ptr;

}

s_personlock.LeaveWrite();

}

-- or --

// Wrap unlink with lock to avoid race condition.

// Avoids downsides of the solution above, but

// Unlink() will be called again safely but

// unnecessarily in the TLink destructor.

void threadsafe_delete_person (person *ptr) {

s_personlock.EnterWrite();

{

ptr->link.Unlink();

}

s_personlock.LeaveWrite();

delete ptr;

}

-- or --

// Same as above, but less fragile; since the

// unlinking is done in the destructor it is

// impossible to forget to unlink when calling

// delete

person::~person () {

s_personlock.EnterWrite();

{

link.Unlink();

}

s_personlock.LeaveWrite();

}

-- or --

// Same as above but reduces contention on lock

person::~person () {

if (link.IsLinked()) {

s_personlock.EnterWrite();

{

link.Unlink();

}

s_personlock.LeaveWrite();

}

}

You can probably tell I’ve done this a lot, huh?

Assignment and copy-construction for links and lists

It’s not possible to copy-construct or assign objects linked into intrusive lists, nor to do the same for the lists themselves. In practice this is not a limitation that you’ll run into. For the special case where it is necessary to move items from one list to another, it’s possible to write a function to splice elements from one list onto another.

Why not use boost intrusive lists?

Boost intrusive list.hpp implements a similar intrusive list to the one I wrote; it is designed to solve every linked list problem you’ve ever had, and consequently it’s daunting to use. Boy howdy did they make it complicated — unnecessarily so, I think.

If you read the source code, I hope you’ll find it straightforward. For a start, the entire intrusive linked-list, including the comments and MIT license, clocks in at under 500 lines.

Compare this with boost intrusive list.hpp — which admittedly does have more functionality — at 1500 lines not including the eleven (11!!) subsidiary headers filled with all that unreadable modern C++ templately bollocks.

Example use-case where std::list would break down

Here’s an example of some code I implemented in ArenaSrv and StsSrv, the server frameworks I wrote that are used for almost all Guild Wars services (both GW1 and GW2), but rewritten for clarity and brevity.

The code is designed to prevent a particular type of attack against a network service called Slowloris. What Slowloris does is to gradually connect lots of sockets to a single network service until it has eventually saturated the server with connections, at which point the server generally stops behaving properly. Apache web servers are particularly vulnerable to Slowloris, though many other network services have similar issues.

//*********** SLOWLORIS PREVENTION FUNCTIONS ***********

// Mark connection so it will be closed "soon" unless

// a complete message is received in the near future

void Slowloris_Add (Connection * c) {

s_pendingCritsect.Enter();

{

// List is kept in sorted order; newest at the tail

s_pendingList.LinkTail(c);

c->disconnectTime = GetTime() + DEFEAT_SLOWLORIS_TIME;

}

s_pendingCritsect.Leave();

}

// Remove connection from "close-me-soon" list

void Slowloris_Remove (Connection * c) {

s_pendingCritsect.Enter();

{

s_pendingList.Unlink(c);

}

s_pendingCritsect.Leave();

}

// Periodically check "close-me-soon" list

void Slowloris_CheckAll () {

s_pendingCritsect.Enter();

while (Connection * c = s_pendingList.Head()) {

// Since the list is sorted we can stop any

// time we find an entry that has not expired

if (!TimeExpired(GetTime(), c->disconnectTime))

break;

s_pendingList.Unlink(c);

c->DisconnectSocket();

}

s_pendingCritsect.Leave();

}

//*********** SOCKET FUNCTIONS ***********

void OnSocketConnect (Connection * c) {

Slowloris_Add(c);

}

void OnSocketDisconnect (Connection * c) {

Slowloris_Remove(c);

delete c;

}

void OnSocketReadData (Connection * c, Data * data) {

bool msgComplete = AddData(&c->msg, c->data);

if (msgComplete) {

Slowloris_Add(c);

ProcessMessageAndResetBuffer(&c->msg);

}

}

You wouldn’t want to use std::list for this type of problem because, each time it became necessary to remove a connection from the “close-me-soon” list it would be necessary to traverse 50% of the list (on average). Since some Guild Wars 1 services hosted as many as 20K connections in a single process, this would entail scanning through 10000 list items — not a good idea!

Credit where credit is due

I didn’t invent this linked-list technique. The first time I encountered it was in Mike O’Brien’s code for Diablo, included in Storm.dll. When Mike and I started ArenaNet with Jeff Strain, the first code that Mike wrote was a better version of that linked-list code.

Upon leaving ArenaNet, I discovered that after ten years of coding with intrusive lists — and the attendant lack of worry about silly linked-list bugs — I needed to re-implement the code again because no better alternatives existed (boost notwithstanding). Some trial and error ensued!

In order to avoid this constant rewriting, I have open-sourced the code using the MIT license, which means you get to use it with no commercial limitations.

Conclusions

Well there you go: an explanation of the uses of intrusive lists. They’re awesome for writing more reliable code because they cleanup after themselves (caveat: multithreaded code).

The programming team that implemented Guild Wars included more than ten coders who were recent college graduates. Had we turned them loose on a game engine to write code using std::list the bug count would have been much higher — meaning no offense to those programmers at all; they’re great folks. By giving them access to tools like these we were able to write 6.5 million lines of code — a huge scope for a game — with exceptional stability and reliability.

A key goal of good programming is to increase reliability. By creating collection classes that “just work” we can go a great distance towards that end.

End of part 2

In part 3 of this article I plan to answer the critical question: how does it all work?

For the impatient, check out the implementation here in List.h.

Thanks for great article!

IMO, std::list now can be used effectively as intrusive list – use std::list instead of std::list. :)

Of course, before C++11, std::list has its own performance penalty – there is no way to avoid overhead from copy constructor. But C++11 introduces emplacement initialization for every container, std::list can be considered as efficient as other intrusive container.

No it can’t. How do you do O(1) removal if you have a pointer or reference to the object contained in the list? You would have to use iterators to reference the objects everywhere, instead of using the object directly. Also, how do you make an object part of several lists?

Yes you have to use iterators instead of pointers – but neither of those is “using the object directly”. You just tradeoff one indirection for another. (& you can hide either behind a typedef if you like).

You’re right that it doesn’t apply to an element in several lists simultaneously. Though that’s going to be interesting anyway – you’re going to have to implement, somewhere, a walk of all the link pointer pairs to determine if an element has been removed from all the lists.

Realistically, I find that objects aren’t usually shared so broadly – usually only one of those lists is the owner. In which case I’d use value semantics in that list, and collections of iterators in the rest. Though, admittedly that’s going to cost me space for those side lists. (but equally those side lists might not be lists – perhaps in those other containers I have different lookup requirements (perhaps a priority queue to keep those connections in a quick “pop lowest” configuration)).

Admittedly, Patrick’s solution might be applicable to particularly perf & space sensitive data structures, which is his target audience I suppose – but I’d be cautious of recommending it as the baseline approach to data structure usage in C++.

I don’t think anybody was recommending intrusive containers as the baseline approach to data structures in C++. I’d rather say the baseline approach is to be aware of the tradeoffs between the various data structures and their implementations and choose accordingly. There are plenty of cases when std::list is fine, but just because it’s part of the language spec doesn’t mean that it’s the Ultimate List Implementation.

Would you actually write code that passes iterators around? When I said “using the object directly” I wasn’t referring to levels of indirection, I meant that you can pass around pointers or references to your object. I think it’s a bit of a stretch to typedef an iterator to ConnectionHandle or ConnectionRef and pass that to functions instead of Connection&. This gets even weirder when you have the object inside multiple lists. Now you have a ConnectionList1Handle and ConnectionList2Handle and you have to remember which one to use. And let’s hope you never need to operate on both lists in the same function, as then you would have to send the object twice, but wrapped in different iterators. The alternative is to store iterators for each list inside the Connection struct, which starts to look like intrusive containers done wrong (and has more overhead).

The other thing about extra allocations still applies if you have multiple lists. Indeed, if the object is owned by a list, you don’t store a pointer to it, you store the object itself and you get only one allocation per element. However, you still get one allocation for each “reference” list the object belongs to, which is not great. You can easily write a 12-byte (or 24-byte, on 64-bit) block allocator which will make such allocations almost free, but I think that’s just trying to prop up a bad solution with ridiculous crutches.

In the end, what do you get in exchange for these crutches and weird-looking code? You haven’t reinvented node linking and unlinking in a list. Great, 6 lines of trivial code. You don’t have to write your own iterator. Great, another 20 lines of trivial code. In exchange for this, you have to write a small block allocator and you have to remember to use an alien-looking typedef instead of a simple pointer.

If you had to write a lock-free list, would you also try to base it on std::list, just because std::list contains the magic node linking code that you don’t want to reinvent?

I wouldn’t mean to suggest that std::list in any more a panacea than TList above. Though I would tend to defer to non-intrusive and pre-made data structures “by default” unless I had a particular need/justification to avoid them. The conflation of containment with contents is something that can be exploited in some specific use cases but could rapidly become a maintenance burden if types may appear in widely disparate lists and only at certain times – now you’re breaking through various abstractions to modify one implementation for the sake of what should be orthogonal clients. Sometimes the loss of abstraction is worthwhile, sometimes not – usually I’ll assume it’s not until shown evidence to the contrary. Certainly Patrick (& others) know that particular parts of game engines/servers/etc will generally be liable to these problems & they’ll be better informed to choose a more niche data structure off the bat.

For what it’s worth I do/have/will write code that passes iterators around, when it’s relevant. I wouldn’t usually suggest it as the general handle because most clients won’t generally need to be concerned with (or even indirectly invoke actions) that remove the element from the primary sequence. For those that do need such operations it’s more than just a matter of “I want to operate on this object”.

Yes, what I described isn’t necessarily applicable to a multiple-container scenario. (though, again, I wouldn’t expect all clients to be dealing in adding/removing elements from all containers – most clients don’t need such abilities & thus wouldn’t need iterators but simply references)

Yes, it also doesn’t apply (or only applies with a tradeoff) there because of overhead in the referential containers. This is a bit more interesting though – as even with the proposed intrusive solution you’re making a different time/space/space tradeoff: what if many elements only exist in the primary (or a single arbitrary) list but very infrequently exist in the secondary list (such as the Slowloris queue shown above)? You’re wasting 2 pointers of space for all those objects that aren’t in your secondary lists – sure, it might be a valid tradeoff, but it’s something to consider if those few bytes matter to you enough to do intrusive systems in the first place.

Iterators are a bit more than 20 lines of trivial code ( http://blogs.msdn.com/b/vcblog/archive/2010/08/09/the-filterator.aspx ) & provide more functionality than the ability to navigate from one node to the next. Their interoperability with standard (& non-standard, but similarly contracted) algorithms means more reusable and legible code. Writing intent instead of explicit code makes a big difference in readability to me, anyway. (std::any_of, std::find, etc – trivial to write, yes, but when reading code the intent is clear as soon as you read the name rather than having to parse out the logic of 5 lines of looping & testing code)

fwiw: A block allocator probably wouldn’t go astray in any fairly large project…

No, I probably wouldn’t try to implement a lock-free list on top of std::list. I’d probably try to implement it as an alternative to std::list, though – with similar separation from its element type and, as much as is reasonably (this will vary, of course) possible, with similar contracts/features as standard containers. For ease of use, reusability, and interoperability.

I agree on all points, particularly on keeping the same interface as the std containers when you roll your own, since it’s a well designed interface and most people are familiar with it (and makes your think compatible with std algorithms and whatnot).

There are too many programmers out there who try to solve problems by looking for a SolveMyProblem() function in some library, without stopping to think how that library does it and if it’s appropriate in their case. It’s a good thing when someone points out that the standard library isn’t a panacea. Trying to always squeeze a round library through a square problem is just as hurtful as the NIH syndrome.

I think the statement about doing it wrong shouldn’t be taken literally. It’s tongue-in-cheek and it’s followed by the obviously sarcastic comment about turning into an elite coder by using intrusive lists. That’s not the takeaway here and people should read and understand the rest of the article, not stop at the grumpy old programmer intro.

PS: yeah, you always end up with a small block allocator in a game, but using it to cover the inefficiency of per-element allocation is still a kludge (and it shouldn’t be the reason why you decide to have that allocator in the first place). If there’s a solution which doesn’t require as many kludges, I’d go with that instead.

Oh, and for what it’s worth, the original article was fairly specific: “If you’re a programmer who uses the C++ STL std::list, you’re doing it wrong.” (in response to your “I don’t think anybody was recommending intrusive containers as the baseline approach to data structures in C++. ” – so, as far as lists go, Patrick does seem to be recommending intrusive containers as the baseline approach)

Why use a list at all when for many of the operations described can be done in log(n) time with better data structures?

Why do something in O(logN) time when you can do it in O(1)?

Because the best case of an O(logN) is better than that of a O(1) data structure/algorithm…

How can it be better than O(1) especially in the case with linked lists where you just delete a couple of pointers?

It’s finished before you start it!

By this time I should have learned that I should favour composition over inheritance but…

Why declare the macro with offests instead of declaring a base class that the items in the list can inherit. You can put the links in this base class. It can accomodate the clean up code so your actual classes will not be polute by the list logic. I think you can even make a single base class that works for all possible lists the item can be put into by puting the links for each of the lists into a list (or hashtable) in the base class.

Because if you inherit you can only have a type in a single list at a time. This implementation allows your types to be in multiple lists simultaniously. Throwing in a hash table or list of pointers for this is an absurd complexity to add just for OOP purity.

Using CRTP, and another template parameter (a tag class by example), you can add whatever number of list.

And, then, you don’t need the offset computation what will certainly be wrong in case of virtual inheritance.

Even a separate class for every list type seems beneficial and the complexity seems OK provided that it is contained in a single base class. If I understand the article correctly you need to copy/paste the destructor code in every item class. It seems like generalized destructor logic can be beneficial. You can also do template magic but templates in C++ are… magic.

Is the something I don’t understand about this code (where is the “automatic” unlinking?)

And even better, if you delete a record that’s part of an intrusive list, it automatically unlinks itself from the list:void delete_person (person *ptr) {

// automagically unlinks person record no matter

// which linked list it is contained within

delete ptr;

}

The destructor for TLink calls RemoveFromList() on itself. Which means that deleting an object that contains a TLink also removes the object from any list in which it happened to be linked.

The moral I hope people take away from this article – even those who aren’t C++ programmers (although maybe they wouldn’t read it at all) is that you have to choose your data structures. The C++ STL contains many wonderful things, but because of its genericity and need to address many problems, if you’re writing something performance-critical or memory-critical or several other kinds of something-critical you can probably do better with something rather more specialised.

For the benefit of certain programmers in the world, I would like to point out that std::string is particularly unsuitable for carting data around in high-performance network software.

You’re right; also well-written specialized code is more concise and expressive, so in the long run it tends to be more readable and easier to maintain due to less boilerplate junk mixed in.

I think that many programmers tend to use what’s there, or what they already know. That’s usually do-able, but there may be a much more elegant solution available depending on what your needs are.

I’m loving your blog, it’s great to get insights from ‘behind the curtain’ so to speak.

Great read :)

They were (and maybe still are) a similar technique to this in the Linux kernel.

For being a simpleton, I find your solution a bit hard to digest. This can be because I don’t get how to implement your solution into a simple test application, but it looks way to complicated than I need it to be.

Considering using smart pointers along with std::list for me have worked, although I admit in a small scale game. Would I benefit from using this solution even at such a scale?

Nice article. This is the same idea used in the Linux Kernel (http://lxr.linux.no/linux+v3.5.3/include/linux/list.h) It is a very neat trick and maybe you can add RCU to your library for better scalability :)

Nevertheless, it’s interesting how concepts from Kernel arrive to user space from time to time and are dismissed again and again :)

I’m not sure this concept came down upon us from kernel programming. I’d rather say it came from CS 101, but with a macro (and/or some template stuff) to save typing.

My bad, I was confused by the images, recalling some from the kernel.

Spelling nazi: the first word of your article is wrong :-)

There are also multiple instances of the misspelling “inStrusive”.

also, “most all” should be “almost all”. compare: “most dead”, “almost dead”; “most full”, “almost full”.

Thank you all! I think I rushed this one, and appreciate the help.

Brilliant article.

Reading comments here and in reddit make me lose hope on humanity.

Why cant people understand that designing your game using this pattern provides O(1) modification of a list state?

Especially in starcraft, selection of units, deselection etc etc would provide you modification of a list in O(1)!!

People can’t clearly think outside the box and realize that lookups are never needed using this technique, since the list itself is part of the structure.

This reminds me of sys/queue.h in FreeBSD: http://www.gsp.com/cgi-bin/man.cgi?section=3&topic=TAILQ_HEAD. They are macros for creating and manipulating singly or doubly linked lists and tail queues. They basically do the same things you describe here (except for the automatic destructors, of course), but for C programs. And you are right: when I started programming on FreeBSD systems these macros helped me write much less buggy code than I otherwise would have.

Even though I’m a Java programmer by trade, those macros in FreeBSD made it really easy to knock out assignments in C without too much fussing over the linked lists. If I ever work on a C or C++ project I’d probably implement or borrow the idea, It’s wonderful compared to straight linked list management.

Your unintrusive list structure, and your library is the way I was learnt to do linked list in C in 1993 doing my bachelor in physics …

It is also the time I tried to write C++ code for a computer simulation and was scolded by my mentor for not being KISS, and that C++ was way to unreadable to be usable in a lab context where things needed to work fast.

People grown up as CS to write code, and physicist grown up to maintain other’s code may not share their vision on what is important in coding.

With all due respect, I feel like your justification under “Why not use boost intrusive lists?” doesn’t hold much water. As far as I can tell the only difference between your library and boost is that yours is “simpler” and has unlink-on-delete semantics (which could be argued as harmful). Either way, you could get the unlink-on-delete semantics by creating a 5-line derivation from list_member_hook.

boost also has the advantage of being a common language that all C++ developers speak. New developers working with your list will have to adjust to new vernacular (why is Head() not called front()?), new paradigms (why isn’t there an iterator?), and so on. I understand the development scene was much different in the era of Diablo I and it was probably justified then, but in 2012 it just seems like a reinvented wheel with fewer features…

I’m not sure all the C++ developers would agree to boost being a good thing. I for one try to stay away from it as much as possible, because I’m not particularly fond of spending more time compiling than writing code.

There’s really no reason not to roll your own intrusive list. It’s easy to write, you can do it as a library, you can use the STL idioms if you want and it will still be done in under a day. Your compile times will not be affected and you won’t have to drag 200 MB of external libs and headers around with your project. Same goes for spawning threads, lexical_cast, command line flag parsing and the other trivial things that people normally want from boost.

You make a fine point re: common language, though when I first started using linked lists in the 90’s, not only was this (invasive) the only method people I knew used but “Head” and “Tail” were the common terminology. I still find begin/end front/back a little weird. :)

re. reinventing wheels: I’d be interested to see a speed comparison, both of compiled code but also (and perhaps more importantly in the field of gamedev) compilation times.

JAVA Ftw

There is value to having a common language for developers to share. One of the problems with modern C++, STL and boost is that language is too big, and requires too much specialized knowledge, for programmers to be effective.

To the extent that we can simplify the concepts and reduce the knowledge required for new team members to join the project, we can increase their productivity.

It’s interesting to look at (for example) the alternate implementation decisions between C++11 and ObjectiveC for lambda functions (http://www.mikeash.com/pyblog/friday-qa-2011-06-03-objective-c-blocks-vs-c0x-lambdas-fight.html). C++ is much more powerful in that it gives the programmer total control, whereas Objective C trades off power and speed for simplicity of programmer interaction with the block.

I think that these are the types of tradeoffs that we need to make as programmers to be more productive as a team. In this case that means choosing to use code that’s simpler than boost intrusive by a large margin.

With all the respect to the creator of my favorite game, I have to disagree.

I don’t know enough details of your implementation, but the boost intrusives also work as standard std containers in almost all ways. It can be used in for each, you can use iterator-based operations on those etc.

I believe, that the advantage of not having to use and learn different version of custom linked list when moving from project to project plus the configurable design of the containers beats the custom implementations even when the boost version might be harder to get the first time.

Well the great thing about programming is that you can write your game the way you & your team feel will work best.

Boost makes it harder to debug the code through all the layers of abstractions it introduces. That alone is enough reason to stay away from it. Coincidentally the only programmers I know who think Boost is a good idea don’t know how to use a debugger and are still printf() debugging…

There are also many other good reasons not to use Boost, you can try using some of the classes (not intrusive list, but e.g. boost::signal) with a tracking memory allocator and you’ll see that some of them do hundreds of allocations for a simple instance. Not acceptable for game programming, and since it’s not documented which classes have this kind of overhead you’re left trying to figure out the overly complicated implementation. Not to mention compiler compatibility with custom ports of gcc and whatnot.

Maybe the fact that NIH introduces many more opportunities for error is the main reason to care about them “undebuggable abstractions”. I don’t buy it.

I see the argument for boost.

It’s presented as one giant monolithic library.

I really think it would be better as 30 separate libraries.

Just the dread of having to download it and compile makes me want to stay away from it – and I run Gentoo.

Except you don’t have to compile it for most things. e.g. Boost Intrusive is header only (obviously). Downloading takes ~15s¹

As far as the “giant monolithic” argument goes, I don’t think it’s true.

On the one hand there are e.g. Interprocess, Graph, Signals, Intrusive, ICL, etc. which are independent (and you have BCP to extract them).

On the other hand, there’s a clique of “foundation” libraries that wouldn’t really work as separated components (perhaps for similar reasons they’re not in the standard): Mpl, Fusion, Typetraits, Utility, Variant, Optional. Having them separate would create combinatoric explosion of test configurations and brittle versioning.

The only culprits I see right now are Boost System and Boost Thread maybe Boost Chrono); they induce some coupling. However, Boost System/Chrono allow header only configs though.

I think the versioning/QA story explains how the library collection evolved. I think it allowed iterations that wouldn’t have been feasible with separate versioning/roadmaps.

Perhaps it would be nice to have a “Boost Core” library and separate surrounding libraries. On the whole, though, I think it’s ok as it is.

—-

¹ (full compilation takes ~10 minutes on my linux box. Granted with MSVC it’s terribly slow)

You ought to be using custom allocators which renders the amount of allocations issue mote. (This is core C++ and is not introduced by Boost.)

You don’t even need the 5-line derivation from the list hook with boost.intrusive, just set the hook’s link mode to “auto unlink” and you’ll have unlink-on-delete semantics (without locks). It even warns you in the docs about the dangers of deletion from multiple threads using auto-unlink.

my reply is late by 2 months, but I started coding in 2003 and back then we were still using Head and Tail. I’ve never even heard of anyone using Front and Back. Although admittedly I haven’t dealt with any linked lists recently.

This article wasn’t all too convincing. You just like your own solution more. Actually your solution forces the developer to do work on every struct manually, where you could have just written a framework around the std::list and would be okay. If you want fast access to all the links referencing an object, why don’t you just write an additional hashmap that keeps track of what is referenced where? O(1) access for deletion and no reason to blow up your structures with unnessesary fields.

If you really need a functionality like deleting objects from a list by referencing the object, why is your first solution not to write a general framework anyway? This way you have your bugs only in one place. And have just one function that might be efficient for your use cases or not and can be rewritten to do other things underneath if nessesary.

Why would you write a “framework” around std::list? What’s so cool about it? std::list allocates once per element, on top of the element itself. Also, why would you have an extra hash map, taking up extra space and causing extra allocations, to do the same thing as an intrusive container? Instead of allocating just the element, you allocate the element, the wrapper structure for every list it’s in, the wrapper for the hash map element and possibly the elements of the list that the hash map contains. Then your game does 3 FPS on God’s Own Computer, and you’ll start complaining that malloc() is a crap function, because it’s at the top of your profile for no apparent reason.

std::list, std::map and std::set are ok when performance doesn’t matter, but that extra allocation per element can prove disastrous in the real world.

Instead of focussing on all these details let me repeat my main point: You always trade advantages for disadvantages. Don’t think good or bad, think I-give-this-for-that. That’s one key difference between efficient coders and normal coders. If you think that your method is way better then another method, then you are wrong. Not because your method sucks, but because you think “it is better”. It is, but only in some edge cases. Yet it is worse in others. Learn to look both ways at the same time!

Those “details” are the reason why this solution is better than std::list in this case. Therefore, I think it’s important to focus on them rather than on truisms about coins having two sides and solutions not being universal.

Actually std::list doesn’t allocate “once per object, on top of the element itself”. It allocates once per object. The object in the examples above are pointers but that doesn’t have to be the case. In cases where your objects only exist in one list at a time you could simply have a std::list instead (& the O(N) removal would be O(1) if you handed around std::list::iterators instead of person pointers)

But doesn’t std::list copy the object on insertion? What if you put your object into multiple lists? You would end up with many copies of a potentially large object . Instead of a simple delete, you would also have to remove your object from each one (just finding the object in each could be very slow if its large and a lot of things has to be compared).

The big difference is that the intrusive list allows one allocation of the object for all list whereas a std::list et. al. approach would require a separate node allocation for each container and would have to point to the node, be type T* not T.

BFHD … until you need to garbage collect. Now you have one list of stuff to scrub. That part wasn’t clear from the article but that is the big gain from the approach.

And one more thing you should be careful about is, using offset macro with non-plain old data type is undefined behavior. It may works with almost of (relatively) simple object, but can be problematic in some complex case (like virtual inheritance).

IMO, Maybe the most parts of “unreadable modern C++ templately bollocks” in the boost intrusive list are needed to handle these kind of edge case properly.

Oh the comments … I guess you shouldn’t have called out those college kids. Now the reddit and hackernews kids are enraged ;)

std::list has remove() method which does exactly what erase_person() does.

Also, erase_person is a bit incorrect because it uses invalidated iterator.

I think the reason for including erase_person, even though it’s redundant, is to illustrate the linear complexity of that operation (or remove()) on a std::list.

Also, you’re correct about the invalidated iterator, should be something like:

std::list ::iterator it = people.begin();while (it != people.end()){ if (*it == ptr) it = people.erase(it); else ++it;}

for (it = people.begin(); it != people.end(); ++it)

if (*it == ptr)

people.erase(it);

Well, that trashed my formatting, and I accidentally kept the pasted original “for.”

https://docs.google.com/document/d/1J4_5RWTcJoxXi-Hi_hW5Ks8R9QYpYQXFskwYEjK-IRw/edit

You should post this as a gist on github.com – perfect for snippets like this …

https://gist.github.com

One nice feature of the C++ spec is that it has big-O complexity requirements for algorithms and container functions, so it’s easy to see what the cost is up front. Of course, this should be balanced by the unstated problem of cache misses, which can affect performance far more than big-O complexity in real-world scenarios. This is one reason I actually do use intrusive lists at times.

Yup, you’re right. I copy/pasted the std::list code from the blog article I mentioned but should have fixed the bug. Didn’t spot it, my bad. Fixed now. Of course this type of problem doesn’t happen using intrusive lists :)

Actually, it *doesn’t* do exactly the same as his erase_person(). It’s an O(n) algorithm, because it removes *all* equal members from the list. This highlights an important point about std::list: it doesn’t guarantee uniqueness, which is kind of why you shouldn’t be using std::list in the first place for the problem described in this article. It’d make far more sense to use a std::set.

Lovely write-up, Patrick, cheers. One thing: In the reduced-contention destructor/unlink case, it’s maybe worth mentioning that that will only work reliably if “Unlink” is okay with being called with an already-unlinked element =)

Presentation pedantics: pre { overflow: auto; } – your preformatted content’s overflowing the right boundary and disappearing.

The unlink function is safe when called whether the object is linked or not. One thing that isn’t obvious — even after I wrote a giant wall-o-text — is that it’s possible to do things like link an object to a new list and it will automatically unlink itself from an old list — safely. I’ll have to add more examples of use cases in a future article.

Many thanks for the note about pre-block overflows; I’ve fixed it as you suggested.

Wow. That’s some near perl-esque magic, right there =)

Good article that show that one size doesn’t fit them all.

The article could be improved by stating what your are trying to achieve. IIUC “adding or removing an element from one or many set (represented as a list in this case)”. IMHO, it would avoid quite a bit of confusion.

And then I doubt anybody would argue for std::list.

Agreed. I think I’ll have to include more use-cases in the next article by way of explanation.

I have a fair amount of experience with intrusive lists, and have arrived at a similar implementation, but I thought I should mention one improvement: Using pointer-to-member syntax (defaulting to using a member called m_Node) you can avoid the type-unsafe offset that you have in your list template classtemplate<class Item_c, IntrusiveListNode Item::*PtrToNode = &Item::m_Node> class IntrusiveList<class Item_c, IntrusiveListNode Item::*PtrToNode = &Item::m_Node> class IntrusiveList

… I think it is a bit more elegant

If I understand correctly, you’re adding an extra pointer field in the link that points to the object — that seems like unnecessary overhead; you’re storing three pointers per link instead of just two (prev/next).

Sorry the code got mangled – it’s just templating over the pointer-to-member so it’s exactly the same as using offsetof, except it is type-safe:

template

IntrusiveListNode { Item *m_Prev, *m_Next; };

template

class IntrusiveList …

damn, how do you post code here?! it’s treating the template definitions as HTML and adding things to it that shouldn’t be there…

I don’t think he’s suggesting adding another pointer. Take your original TListDeclare template, but instead of passing the offsetof as the second argument, pass the pointer-to-member of the TLink member. You can then use that pointer-to-member to access the relevant TLink member of any person object.

I sense you’re more comfortable with older C++ coding paradigms due to the bare pointers and not using auto-unlocking objects with your mutexes. Some of these operations could be expressed much more eloquently that way. That said, this article does a good job of motivating some good use-cases for intrusive lists over std::list.

Those would be fighting words in some parts, but I’ll try to address the intent of your message instead of the way it’s worded — old school indeed :)

While I could have used classes that acquire locks and release them on scope-exit (RAII code shown below), I consciously chose not to do so because the locking behavior would be hidden:

{

ScopedLock lock(&s_pendingCritSect);

… do stuff …

// destructor for ScopedLock releases lock on s_pendingCritSect

}

By using more explicit notation, it’s possible for novice programmers to understand what’s going on. Incidentally, most of the code in Guild Wars 1 was written in that “old school” style — we started writing that code in 2000.

> While I could have used classes that acquire locks and release them on

scope-exit (RAII code shown below), I consciously chose not to do so

because the locking behavior would be hidden:

Please forget C with classes and go learn C++.

> By using more explicit notation, it’s possible for novice programmers to understand what’s going on.

So, you call yourself a novice programmer? At least one fact we agree on.

Really? Is that a constructive thing to say? Does that make you feel good? How about we keep the dialogue at a level where we can all learn something.

q:”I consciously chose not to do so because the locking behavior would be hidden … it’s possible for novice programmers to understand what’s going on” — does that statement refer to code posted in a Blog or to production code? I’m all for making it easy for novice programmers, *but not at the expense* of having all code look like it was written by novice programmers! (And, especially ScopedLocks were really, really easy to get for really everyone around here. I’d go so far as to say you shouldn’t be writing a single line of code unless you can understand what a simple ScopedLock does.) ((So, hmm, now, was that my someone’s-wrong-on-the-internat post for this day?? :-) ))

i’d recommend reading the michael abrash graphics programming black book – he has some good examples of this sort of list implementation, with performance/memory enhancements

generally though i’d say avoid linked lists – they are seldom the right answer – certainly in performance critical code where having things in contiguous memory is much more important for performance – and usually results in simpler, easier to maintain code as well.

also, sorry to say it, but the approach to thread-safety is a little repulsive. locks? really? good design can usually avoid needing that kind of heavyweight solution…

I second your recommendation of the Abrash book.

Having written most of the servers for the Guild Wars backend, I cannot imagine not using linked lists — they’re so useful for dealing with objects where you don’t need random access and the data is unbounded.

Finally — can you propose an alternate data structure for the Slowloris problem that has the same properties but doesn’t require locking and has unbounded size?

I guess the need for locks is because you’re using IOCP and so can’t do any thing at a per-thread level? I think the alternative solution here is to change the workflow rather than trying to find a better data structure. Or simply rely on an external load-balancer to deal with the issue so your code doesn’t need to consider it at all.

Link to the book. :)

http://www.phatcode.net/res/224/files/html/index.html

While I’m generally against liniked lists your Slowloris example is probably the best example I’ve seen of where they are a good choice.

You’re not iterating through the entire list all the time so you avoid the major reason not to use them.

This is of particular interest to me as I tend to use std::list for game objects, though the objects themselves have components added using the decorator pattern (meaning intrusive lists).

For prototyping and game-jams I use HTML5, and so far haven’t found an efficient way of doing through my Array of objects to update, building a subsidiary Array of those I want to delete and then splicing those indices out of the main Array. It’s filthy as all hell and not a particularly efficient solution: I think I might reimplement something similar to yours (but in JS) in the future!

Using std::list iterators you can have O(1) deletion.

I believe you may be able to duplicate all the functionality present in this article by using iterators, and with less code.

What you’re arguing is that each object linked into the list needs to store its own iterator object, in addition to the prev/next pointers. While it’s certainly possible to do that it is doubtful whether there is less code to write, and it would use sizeof(iterator) additional memory for each link.

There’s no need to store the prev/next pointers. Those are provided by the iterator, which is bidirectional. You only need the iterator.

Also, sizeof(list::iterator) is probably 4 bytes:

http://gcc.gnu.org/ml/libstdc++/2004-02/msg00113.html

Meanwhile, you won’t need to write the classes present in this article, or utilize the preprocessor commands you’ve described, or manually store prev/next pointers, or suffer from using a container which doesn’t define standard stl interfaces or iterators…

For instance, moving to C++11, those using a std::list will still be able to:

for(auto iter : some_list) …

Exactly what I was thinking, change the functions to accept iterators and you’re done. +1

From the std::list specification:

> Lists have the important property that insertion and splicing do not invalidate iterators to list elements, and that even removal invalidates only the iterators that point to the elements that are removed. The ordering of iterators may be changed (that is, list::iterator might have a different predecessor or successor after a list operation than it did before), but the iterators themselves will not be invalidated or made to point to different elements unless that invalidation or mutation is explicit.

So, you can get all the functionality you desire by using std::list as it was designed. If you store the iterators then insertion/deletion is O(1), achieving the same “intrusive list” functionality as described in this article, with far less effort and using standardized structures.

You’re right, *if* you’re willing to store the iterators with the links. But why waste the extra memory?

I wouldn’t say “far less effort” because you’ll need to write a std::list wrapper that manages the iterator automatically, and you’ll likely end up with the same sort of list library I’ve created here.

There’s no need to store the prev/next pointers. Those are provided by the iterator, which is bidirectional. You only need the iterator.

Also, sizeof(list::iterator) is probably 4 bytes:

http://gcc.gnu.org/ml/libstdc+…

Meanwhile, you won’t need to write the classes present in this article, or utilize the preprocessor commands you’ve described, or manually store prev/next pointers, or suffer from using a container which doesn’t define standard stl interfaces or iterators…

For instance, moving to C++11, those using a std::list will still be able to:

for(auto iter : some_list) …

And, I don’t see what sort of iterator management would be involved other than simply storing it. The STL guarantees that std::list iterators are both bidirectional and stable.

“store iterators with the links” – I’m not sure what you mean by that.

In the case of only pivoting on one axes (only having nodes in one list, not multiple simultaneously) you don’t store the iterators anywhere – you have list and where you were handing around person* (at least the ones you care to use to remove elements) you hand around list::iterators.

If you’re pivoting on more than one axes, your solution becomes more relevant, though there’s still some considerations to make before choosing it over other structures. (tradeoffs, always)

You’re going to waste that memory anyway trying to enforce uniqueness (either that, or a ton of CPU).

Looks cook. Though I’ve heard about entities which leave outside of lists normally too :)

std::list is a node-based structure, so using it to store a pointer to a uniquely allocated heap item is a bit silly. The implementation of “node” underlying a std::list is typically struct Node { Node *next, *prev; T t; }; This is an identical memory layout to your “intrusive” list if you declare std::list instead of std::list. The only difference I can see is instead of handing bare pointers around (person*), you hand iterators. This is probably a better programming practice anyway: bare pointers come with a lot of baggage in C++ (is this transfer of ownership? can the pointer be NULL? is it safe to cache this pointer for later use?).

Indeed – this is probably unambiguously the right thing to do if you only have a single list you need to put things in. The above intrusive data structure becomes more compelling (though still not without other considerations) when you have multiple axes on which you need to pivot your data.

(also, unlinking elements from a std::list to put them into another std::list isn’t so easy – it’s possible with std::list::splice but you may end up carrying around a little baggage (sentinel nodes in the std::list))

If you need multiple ways of looking at you are data, you are better off with something like Boost’s Multi-Index containers.

Fair point.

Can be an issue when disparate parts of your system wish to index in different ways – but all the proposed improvements involve tradeoffs of abstraction/space/time.

Good article, but it bears mentioning that std::list has a remove() method, and there’s a remove() function in the algorithm module as well. The opening to the article would be better if it at least mentioned this. As it is, it seems like no one bothered to read the docs for std::list.

The remove algorithms only really improve the situation where you have multiple elements that satisfy a condition (but no idea where those elements are in the sequence) to remove. Then you use “erase+remove” and you end up with O(N) instead of O(N^2).

In the example above, it wouldn’t help performance (though it’d be much shorter/easier/less error-prone code to use std::list::remove, you’re right there) since only one element is being removed. The way to improve performance would be to deal in std::list::iterators instead of in raw person pointers.

I absolutely agree. I was mostly responding to this portion of the article:

“More importantly, it’s necessary to write list-removal functions like the code above, which takes programmer dev-time, slows compilation, and can have bugs. With a better linked-list library, this code is entirely unnecessary.”

Agreed

But how do you ensure there is only one element in there in the first place? std::list doesn’t guarantee it, because it is the wrong data structure for that job! std::set would make much, much more sense.

True – though not always the right time/space tradeoff. In this case Sean was just talking about a narrow point from the original article. There are certainly cases where you want to remove the first element that equals some specified element.

But yes, fair point about other containers being worth consideration in the broader case.

Missed the Disqus update on this. Replying now, though the subject is dead.

Doesn’t the original article’s implementation *also* need a way to impose uniqueness of elements in the container? It seems to me that it skips over that issue entirely.

std::set mostly suffers from some really poor implementation choices in most standard libraries. In practice a good implementation should perform at least as well as a linked list (which makes sense, as the contract it imposes is effectively the same one would want to impose, and there is no good reason not to have the implementation be as efficient as possible for that interface). The main problem I can imagine is if you had an additional constraint about preserving ordering (of course, there are container interfaces for those too, but they aren’t part of the standard library).

If you are stuck with a crummy std::set (as most people are), using std::unordered_set helps, or alternatively something like Google’s cpp-btree library (https://code.google.com/p/cpp-btree/).

I wonder why lists are used so often. In my experience lists are most of the time the wrong type of container. In our not so small codebase there is only a single list in a rather specific algorithm that needs the splice operation. If you do not need splice you do not need a list.

For example why should one ever want to store a person in a Persons list as shown in the article? Most of the time a vector is better. Sometimes a hash map or map are necessary. But nearly never a list.

For personnel which for most organizations I would imagine does not change very often, yes. For games which have objects constantly popping in and out of the world, no.

I do not think that games are special. Every non-trivial software constantly creates and deletes objects that have to be kept in containers.

Games are special. For example Kalimdor realm’s map of passable points 1×1 yard can easily eat 2GB RAM. You low in every resource you have. Making ordinal C# like objects tree “this class represents my intentions, this ancestors representing good names and architecture … after 200 files with empty classes there is real class with code ‘return (a);'”. By multiplying you class size in memory on units or players amount you finally figuring there is several machines from “top 10” list able to execute your program in real time.

And by opening your program in decompilers you can find that there hundreds of useless jumps per useful line.

Your example STL code sucks… no wonder it works and performs worse than custom list implementations. Using std::list (instead of Person*) with an id field, and proper algorithms (std::remove_if) preserves efficiency, correctness and code quality.

You have no idea what you’re talking about. The original problem required an object to be part of several lists and O(1) deletion. Your “solution” ties the object to a single list and has O(N) deletion. 0/2 goals met, nice.

Personally, I think a better solution (at least in most cases) to the N/2 problem would be to use a hash in your linked list implementation so that you could look up the link object associated with the memory address of the object being linked in O(1) time. It would add memory overhead, and still isn’t really as fast as your implementation, so it’s not a perfect solution. But it would run acceptably fast in most scenarios, and it saves your from the pain of having to manually having to muck with the definition of classes every time you want to add them to a new list.

A thought-provoking piece, but there are various points where I feel like your ‘better solution’ smells a bit. In particular, the use of `offsetof` is not very nice. It seems a better solution would be:

template<typename T, TLink T::* link_member>

class TList {

…

};

This can then be instantiated with:

TList employees;

This at least adds considerable type-safety to your version.

Sigh. It seems disqus makes rather a hash of template code in comments. I’ll try again:

template<typename t, TLink<T> T::* link_member>

class TList { … };

TList<employee, &employee::managerLink> employees;

…but then, compared to the horrific pointer arithmetic in the TLink implemented in List.h, the use of offsetof is the least of your worries! Anyone on my team who submits code like this for review can join the queue for the public floggings.

Why use the low-order bit of a pointer as an end of list sentinel instead of (T*)0 like the rest of the world?

Patrick, your solution offers O(n) deletion if you do not have a pointer to the object, or O(1) if you do. std::list gives O(n) deletion if you do not have an iterator to the object, or O(1) if you do. The O(n) vs. O(1) difference you’re claiming doesn’t exist when you compare like with like. Your socket example where std::list “breaks down” only breaks down because you’ve chosen to use std::list inappropriately.

Your diagram that shows the object and links being stored separately for std::list but together for yours is also the product of not comparing like with like – you’re comparing an instrusive list of objects against a std::list of pointers. The extra indirection in your std::list diagram is the one you explicitly added by storing pointers to objects rather than objects.

I do, however, like the idea of intrusive lists for the case where the same item needs to be stored in multiple lists, but that introduces the extra complexity of tracking when the item is unlinked from the last list it is a member of. –ApplicationSpeed. ++CodeComplexity.

The technique you’ve presented is interesting for a specific, niche, use-case but your general point that “If you’re a programmer who uses the C++ STL std::list, you’re doing it wrong” is hyperbole.

You (and other commenters) are right that if I had to search the

list, it would be O(N). It’s unstated, but we all know that linked-lists are not a good random access data structure. But as you can see from the Slowloris example, there’s a mechanism where my code is getting called back and “given” a pointer to the object, so it isn’t necessary to search for it.

The idea that I didn’t do a good job conveying is that the objects to be stored will have multiple linkages, and be stored in several lists, hash tables, priority queues, and what-have-you, so using std::list instead of std::list doesn’t work.

Once you have such an object, and need to delete it from all its container classes, intrusive lists (and hashes, pri-queues, etc.) can do the operation in O(1) instead of O(N), which is what makes linked lists of 20K items — where the iterator is not saved — actually workable.

Your point about the language I used is, unfortunately, correct. What was intended as exuberance in explaining why intrusive lists are useful was

unnecessarily inflammatory. Whoops. My apologies.

I agree that your implementation is a nice way to solve the problem of storing the same object in multiple lists. It’s a potentially very useful technique – before reading this I’d probably have jumped to hash tables without considering intrusive lists. My objection is that it’s inferior to std::list outside of that use case.

Rather than saying “If you’re a programmer who uses the C++ STL std::list, you’re doing it wrong” I think it would be more accurate to go with “If you’re using multiple std::lists of pointers to the same set of objects you’re doing it wrong”.

As for the Slowloris example, it could have been built using std::list. Your Slowloris code is *given* a pointer, which makes the functions O(1). This implies that whatever is calling the code you’ve shown has some mechanism to find a pointer to the object – it’s either stored the pointer (in which case you could have stored the iterator instead) or it’s doing an O(n) lookup. Either way, I don’t believe the Slowlaris example is a use-case where your list provides an benefit.

> This implies that whatever is calling the code you’ve shown has some

mechanism to find a pointer to the object – it’s either stored the

pointer (in which case you could have stored the iterator instead) or

it’s doing an O(n) lookup.

That’s not true: if your objects are accessible from 10 different lists, you’ll only get an iterator for the list you were enumerating, but not for the 9 others. You’ll have no way to make the object disappear from those other lists without enumerating O(n) elements.

WHY NOT USE MAGIC??

Wow the internet is harsh. Looks like most C++ programmers will only move away from STL::List if they start writing games.

If you delete ptr; you’re doing it wrong. Smart pointers didn’t exist then, I grant you, but it’s not something you could get away with advising now.

But secondly, the main thing I’d say is that I felt that you didn’t discuss many alternatives, like storing the pointers in a hash table for O(1) removal, and your lead-in about using std::list wrong is a bit hyperbolic. All you’ve said is that the Standard linked list class is the most generic linked list class possible, and you had a special need which made it more useful to not do that- which is fine, and perfectly true. But it doesn’t make using std::list wrong or existing use cases of it wrong.

Whether you delete the pointer, or use a smart pointer to delete it, eventually the object gets deleted. At that point it needs to remove itself from linked-lists, hash-tables, trees, priority-queues and what-have-you. *That’s* when intrusive data-structures become useful, because the object destructor then doesn’t have to use O(N) removal algorithms for the linked-lists.

Hyperbole: guilty as charged.

Oh, the pains of C(++). Glad I elect to use higher programming languages.

In any case, why use lists at all if you need quick random access? There are plenty of other data structures.

You wouldn’t use lists in the case you need random access. But that doesn’t mean there aren’t plenty of cases for using linked lists.

Hmm … I’m wondering whether needing to hold — own — objects in multiple datastructures at once is really such a common requirement? (And aside, would YOU use shared_ptr nowadays? – And if not, why?)

Well it looks like you got lots of people thinking. Thanks for taking the time to share that with us. We can debate forever of what the best solution is in different contexts, at the end of the day it’s your awareness of the various approaches and forces at work that will make a difference. And you’ve increased mine successfully and pleasantly. Keep it up!

Great article but you miss one important point. You said that bucket is bad container for human hearts. You forget that bucket is best container for sand :D

First of all, in how many TList you can have one object? only one per TLink. This is great limitation that std::list cant have. Using std::list you can have unlimited number of list that have same object. In case of SC & GW this property of std::list is useless, and your implementation is superior in that case.

There’s a formatting error in the article:

‘struct person { TLink link; // The “intrusive” link field unsigned age; unsigned weight; };’Is all on one line, when I believe it should be 4

Thank you; fixed.

Moron.

Very interesting articles. I’m looking forward to part 3. In fact, I’ve been waiting feverishly for it! :)

Great articles! Can’t wait for part 3!

this probably isn’t relevant, but i thought it was interesting – if you’ve just got a list of pointers (not big objects), it might be faster to stick them in a vector instead of a list. I’m looking at slide 45 here: http://ecn.channel9.msdn.com/events/GoingNative12/GN12Cpp11Style.pdf

Appreciate the article, cool insight into some of the design decisions you’ve faced. Also, i have fond memories of playing starcraft many hours of starcraft years ago.

However, I’m not convinced your final example for destructing and unlinking an element is threadsafe. link.IsLinked() is not an atomic operation. Seems that one thread could be linking an object while another thread is entering the destructor, leading to the deleted object not being unlinked.

I suppose in practice, you must have mechanisms in place to ensure one thread is not modifying an object while another thread is deleting that object. But if that is the case, I’m not sure what value the locks in the destructor are providing.

Looks like I’m about a month late to this party, but oh well.

Unlinking is not a thread-safe operation, which is why all the list operations are performed only when the s_pendingLock critical section is locked.

Thanks for sharing this, although i’m a beginner in programming I think I’ve learned a lot from this. I still have my copy of W2: Dark Portal with me :)

thank you.

Best regards: Joyfax Server

I would like to point out that std::string is particularly unsuitable

for carting data around in high-performance network software.

Isn’t this thread-safe deletion just wrong? If you need locking there it means object is being deleted multiple times. Can’t imagine how it could be corrected without introducing new issues.

Still thanks for a great article. It’s astonishing how quickly standard containers become unusable when performance is really important (and that’s why we use C++ afterall).

With all due respect, if I understood your implementation correctly, it seems like your implementation is making the “next” pointer as an odd numbered address ( by adding 1) to indicate that the pointer is an un-linked pointer.

It is for sure if byte alignment is even numbered byte alignment such as 2 or 4 (8 or 16), however I was wondering if your implementation also satisfies when byte alignment is 1 byte alignment ( ex. #pragma pack(1) )

With all due respect, if I understood your implementation correctly, it seems like your implementation is making the “next” pointer as an odd numbered address (by adding 1) to indicate that the pointer is an un-linked pointer. It is for sure if byte alignment is even numbered byte alignment such as 2 or 4 (8 or 16) byte alignment, however I was wondering if your implementation still satisfies when the byte alignment is 1 byte alignment (ex. #pragma pack(1) ).

You’re correct that I’m using the lowest bit of the next pointer as a sentinel to indicate end-of-list. Incidentally this is the same trick that the Boost library uses, though Boost wraps it in several layers of template indirection so it’s hard to see right off.

You’re absolutely correct that, for this trick to work, it’s necessary for the memory manager to only return memory that’s aligned on an even memory address, and further that any structures which are defined using #pragma pack(1) must carefully align members to ensure that linked list nodes (TLink) do not start add odd memory addresses.

Any general-purpose memory manager which returns allocations that are not aligned on *at least* a four-byte boundary would be very poorly written. There are substantial CPU clock-cycle penalties for reading and writing non-aligned memory on some processors (ex: writing a dword to 0x…..3), and some processors will even generate an exception. Specialized memory allocators can return whatever they want, of course, but would generally be used for byte-aligned data if they’re not guaranteeing alignment.

In regard to #pragma pack(1) structures, they should only be used for serializing data to disk or network where it wouldn’t make sense to include a list node. In the event that you do need to use a pack(1) struct just make sure that the list-node fields are dword-aligned (on 32 bit) or quad-word-aligned (on 64 bit).

Incidentally, the linked-list code I wrote uses assertions in three TLink functions to ensure that link pointers are never stored at odd addresses.

Whatever happened to part 3? I was looking forward to it. ^_^

Yeah, what happened to it! Oh wait, I was supposed to write that. Two things happened.

First, I got more busy — like, a lot more: writing code and advising several companies in addition to raising four kids. I’m constitutionally unable to not work so my free time is always trending towards zero. That’s why no articles recently, ssssooorrrry.

Second: this was my most controversial article. It occasioned quite a lot of negative responses because I killed several sacred cows without providing enough evidence for said slaughter. Example: coders all put out because I used the low bit of the pointer as an end-of-list sentinel. Actually, boost intrusive lists do that too but the gory bits are buried fourteen layers deep in (type-safe) templates so likely no one has ever read that deep into the code. But it means that I have to actually, y’know, write a scintillating and generally spectacular article to address those criticisms. Perhaps that’s why I can’t seem to write short blog posts, only book chapters. See — even this comment is long!?!

So that’s why.

But read my response to “Guest” below ’cause I did provide some details :)

I was having a look at your hash-table implementation, and as you point out, it doesn’t yet have functionality for growing the number of rows. I’m just wondering how you would add support for that — it seems like it could be a bit difficult, because in order to determine when to grow the hash-table, you would need to know the load factor, and thus the number of nodes in the hash-table.

What method would you suggest using in order to keep a count of the number of nodes? I can’t quite figure out how to do this. In theory, nodes could be removed without the hash-table knowing; i.e., if you call Unlink() on the TLink object of a node in the hash-table, either explicitly, or by deleting a node.

The solution used for growing the hash table in Guild Wars (which I didn’t write) was the same as the one used in Battle.net ~1997 (which I didn’t write either). Instead of evaluating the table load factor, it looked at the number of entries in a particular row. My recollection is that, when inserting into the hash table, the algorithm would count the number of entries in the insertion row and, in the event there were more than five entries it would rehash the table.

This solution has any number of downsides — it is susceptible to an attacks which can force table growth, a weak hash function can cause table-size explosion, and it’s necessary to traverse a table row on each insert. In practice none of these issues was a significant enough problem to warrant fixing. In particular, I prevented hash-table growth attacks for Guild Wars servers by preallocating table sizes based on the maximum expected (or allowed) table utilization.

The thing that the Coho hash table gets right from an engineering standpoint is that when objects are deleted they unlink themselves from the hash table automatically. But ultimately I’m not sure that it is the right implementation for a general purpose hash table. One of the features of modern CPU architecture is that cache misses cause long stalls, and so chaining down a list of pointers is horribly inefficient. I was planning on writing an implementation of closed hashing Cuckoo hashing that supported auto-deletion and running some comparisons, but — as evidenced from the lack of recent commits to the Coho library, I haven’t been working in C++.

It’s funny but when I write C++ I feel constrained to write the best, most efficient, more-or-less perfect solution, but when I write in other languages (Ruby, Elixir, C# are my tools of late) I seem to be able to focus more on the problem at hand!